Technical SEO Audit: Identifying and Fixing Common Issues

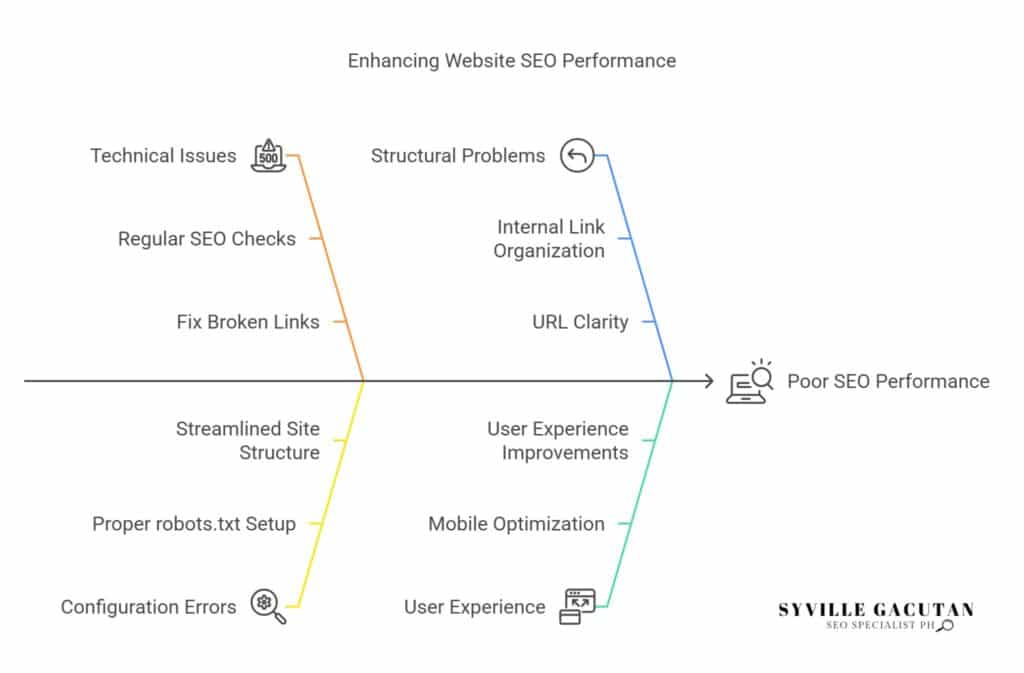

A technical SEO audit is essential for boosting your website’s visibility by spotting issues that can hurt its search engine rankings. Common problems include poor site structure, pages that search engines struggle to crawl, and a lack of mobile optimization. Improving page speed by optimizing images and reducing script size is also important, as is securing your site with an SSL/TLS certificate. Fixing broken links and refining your XML sitemaps help ensure smooth navigation and proper indexing. Regularly checking your indexing status and eliminating duplicate content can further improve your SEO. By following best practices in these areas, you can significantly enhance your website’s performance in search results.

Key Takeaways

- Regular SEO checks: Use tools like Screaming Frog to regularly find and fix any technical issues on your site.

- Set up robots.txt properly: Configure your robots.txt file to keep search engine crawlers away from duplicate or low-value URLs, remembering that it controls crawling, not indexing, and is not a way to hide sensitive content.

- Streamline site structure: Organize your internal links and URLs clearly to help search engines crawl your site more easily.

- Mobile optimization: Make sure your website looks great and works smoothly on mobile devices to improve user experience and rankings.

- Fix broken links regularly: Use tools to find broken links and set up 301 redirects to keep your site running smoothly.

Importance of Technical SEO

In today’s fast-changing world of digital marketing, technical SEO is key to making sure your website is visible and easy for search engines to access. Understanding its importance is vital for maintaining a strong online presence. By following SEO best practices, you can ensure your site meets search engine requirements, increasing the likelihood of ranking higher in search results. This is especially important as search engine algorithms are regularly updated, continually changing how websites are evaluated and ranked.

A strong content strategy relies on a solid technical SEO foundation. Without it, even the most engaging content may go unseen by the intended audience. Technical SEO ensures that all aspects of a website, from metadata to structured data, are optimized so search engines can crawl and index them properly. This optimization also improves user experience through fast loading times, mobile compatibility, and intuitive navigation, all of which keep visitors engaged and reduce bounce rates.

Ongoing site maintenance is another critical aspect of technical SEO. Regular audits and updates keep a website aligned with the latest SEO guidelines and algorithm changes, catching problems before they hurt its performance in search results.

As search engines evolve, so too must the technical aspects of a website, ensuring it remains a viable and competitive digital asset.

Understanding Crawlability

Crawlability is a fundamental aspect of technical SEO that determines how effectively search engine bots can access and index the pages of a website. Ensuring optimal crawlability involves addressing various factors that can either facilitate or hinder search engines’ ability to navigate your site.

Without proper attention to these elements, your website’s visibility in search results may be compromised, impacting its overall performance.

To enhance your website’s crawlability, consider the following key components:

- Crawl Depth: This refers to the number of clicks required to reach a specific page from the homepage. A shallow crawl depth ensures that important pages are easily accessible, allowing search engines to index them more efficiently. Aim to keep essential content within three clicks from the homepage for optimal crawlability.

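- robots.txt: This file tells search engine bots which parts of a website they may crawl and which they should skip. Configure it to keep bots away from duplicate or low-value URLs, such as internal search results, so that crawl budget is focused on valuable pages. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it. To keep a page out of the index, use a noindex directive on a page that remains crawlable, and protect sensitive content with authentication, since anyone can read a robots.txt file.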

- Crawl Budget: This represents the number of pages a search engine crawls on your site within a given timeframe. Managing crawl budget matters most for large websites, where bots that spend their requests on duplicate or low-value URLs may overlook important pages.

- URL Structure and Sitemap Hierarchy: A clear, logical URL structure, complemented by a well-organized sitemap, helps guide search engine bots through your site’s content. Proper hierarchy ensures that the most critical pages are prioritized during crawling, enhancing their likelihood of being indexed and ranked effectively.
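To check what a robots.txt file actually allows before deploying it, Python's standard-library `urllib.robotparser` can evaluate the rules offline. This is a minimal sketch, assuming a hypothetical robots.txt for `www.example.com`. One caveat: this parser applies the first matching rule in file order, while Google applies the most specific (longest) matching rule, so results can differ when `Allow` and `Disallow` rules overlap.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com: keep crawlers out of internal
# search results and cart pages, allow everything else, and list the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)
for path in ["/", "/blog/technical-seo-audit", "/search?q=seo", "/cart/checkout"]:
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(f"{path}: {'crawlable' if allowed else 'blocked'}")
```

Running the same check against your live rules before publishing a change is a cheap way to avoid accidentally blocking important sections.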

Analyzing Site Architecture

Effective site architecture serves as the backbone of a well-optimized website, directly influencing both user experience and search engine optimization. A well-planned site hierarchy ensures that users and search engines can easily navigate through the website, leading to improved engagement and higher rankings.

Site hierarchy involves organizing content logically, allowing users to find information swiftly and search engines to index content efficiently. This organization enhances user navigation, making it intuitive and seamless.

Content organization plays a crucial role in site architecture, as it dictates how content is grouped and presented. Proper content organization aids in creating a coherent URL structure, which should be simple, descriptive, and reflective of the content it represents.

A sound URL structure helps both users and search engines understand the relationship between pages, enabling efficient crawling and indexing by search engines.

Internal linking is another pivotal component of effective site architecture. It facilitates the connection between different pages within a website, distributing link equity and helping search engines discover and prioritize important content.

Strategic internal linking can guide users through a logical flow of information, enhancing their overall experience and increasing the likelihood of conversions.
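Crawl depth can be measured directly from the internal link graph. The sketch below runs a breadth-first search from the homepage over a small, hypothetical link map (in practice the map would come from a crawler export). It flags pages deeper than the three-click guideline and orphan pages that no internal link reaches.

```python
from collections import deque

# Hypothetical internal link graph: each page maps to the pages it links to.
LINKS = {
    "/": ["/services", "/blog"],
    "/services": ["/services/technical-seo"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/old-post"],
    "/services/technical-seo": [],
    "/blog/old-post": [],
    "/orphan-page": [],  # exists, but nothing links to it
}

def click_depths(links, start="/"):
    """Breadth-first search: minimum number of clicks from start to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

depths = click_depths(LINKS)
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
orphans = sorted(set(LINKS) - set(depths))
print("Deeper than 3 clicks:", too_deep)
print("Unreachable from homepage:", orphans)
```

Pages that show up as too deep are good candidates for links from category pages or the main navigation.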

Enhancing Mobile-Friendliness

Alongside site architecture, prioritizing mobile-friendliness is imperative for any comprehensive SEO strategy. Mobile devices account for a large share of web traffic, and Google primarily uses the mobile version of a site for indexing and ranking (known as mobile-first indexing), so ensuring a seamless experience on these devices is crucial.

A pivotal element in enhancing mobile-friendliness is embracing responsive design. This design approach ensures that web content adapts fluidly to various screen sizes, offering optimal viewing regardless of the device used.

Conducting rigorous mobile testing is essential to identifying potential issues affecting the user experience. Here’s a checklist to guide you:

- Responsive Design: Ensure your website automatically adjusts to fit the screen size of any device, from smartphones to tablets, without compromising on visual or functional aspects.

- Viewport Settings: Properly configure viewport settings to control the page’s dimensions and scaling on different devices, ensuring content is displayed as intended.

- Touch Targets: Verify that buttons, links, and other interactive elements are large enough and spaced far enough apart to tap easily, reducing user frustration and accidental taps.

- User Experience: Continuously evaluate and enhance the overall user experience by ensuring that navigation is intuitive, content loads correctly, and important information is easily accessible.

Effective mobile optimization requires a thorough understanding of these components and their interplay. Addressing these areas not only improves user satisfaction but also boosts search engine rankings, as search engines increasingly prioritize mobile-friendly websites.
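Viewport settings are easy to verify automatically. This minimal sketch uses Python's built-in `html.parser` to check that a page declares a viewport meta tag with `width=device-width` (the standard responsive setting) and to flag `user-scalable=no`, which blocks pinch-zoom. The class and function names are illustrative, and a real audit would run the check over HTML collected by a crawler.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Records the content attribute of a <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""

def check_viewport(html: str) -> str:
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return "missing viewport meta tag"
    # Turn "width=device-width, initial-scale=1" into {"width": "device-width", ...}
    settings = {}
    for part in finder.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    if settings.get("width") != "device-width":
        return "viewport does not use width=device-width"
    if settings.get("user-scalable") == "no":
        return "viewport disables pinch-zoom"
    return "ok"

print(check_viewport('<meta name="viewport" content="width=device-width, initial-scale=1">'))
```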

Improving Page Speed

Page speed plays a critical role in both user experience and search engine optimization, influencing how quickly content loads and is rendered to visitors. A sluggish site can deter users and lead to a higher bounce rate, impacting search rankings. To improve page speed, several technical strategies can be employed.

Image optimization is a crucial step, since oversized images can significantly slow down page loading. Using modern formats such as WebP and compressing images with little or no visible quality loss can substantially reduce file sizes. Tools like TinyPNG or ImageOptim can automate this process.

Script minification involves removing unnecessary characters from code, such as spaces and comments, without altering functionality. This reduces the file size and enhances load times. Tools like UglifyJS for JavaScript or CSSNano for CSS can streamline this task.
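To show what minification actually removes, here is a deliberately naive CSS minifier built on regular expressions. It is a sketch for illustration only, not a substitute for CSSNano or UglifyJS, which parse the code properly and handle edge cases this version ignores.

```python
import re

def minify_css(css: str) -> str:
    """Naive CSS minifier for illustration: strips comments and extra whitespace.

    It does not understand strings, url(...) values, or other edge cases that
    production tools handle correctly.
    """
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace runs
    css = re.sub(r"\s*([{};,>])\s*", r"\1", css)          # trim around punctuation
    css = re.sub(r":\s+", ":", css)                       # "color: red" -> "color:red"
    css = css.replace(";}", "}")                          # last semicolon is optional
    return css.strip()

source = """
/* Header styles */
.header {
    color: #333;
    margin: 0 auto;
}
"""
print(minify_css(source))  # .header{color:#333;margin:0 auto}
```

The browser interprets both versions identically; only the bytes sent over the network shrink.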

Caching strategies are another vital aspect of speeding up web pages. With browser caching, a returning visitor’s browser reuses stored copies of unchanged resources, such as images, stylesheets, and scripts, instead of downloading them again. Content Delivery Networks (CDNs) cache resources on servers closer to each visitor, which shortens load times for new and returning visitors alike.
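Browser caching is controlled mainly through the `Cache-Control` response header. The sketch below shows one common policy, assuming fingerprinted file names such as `app.3f9a2c1b.js`: long-lived caching for versioned static assets, a short lifetime for unversioned ones, and revalidation for HTML so visitors always get fresh pages. The function name, file-naming pattern, and lifetimes are illustrative choices, not fixed rules.

```python
import re

# Assumption: the build tool fingerprints static files with a content hash,
# e.g. app.3f9a2c1b.js, so any change to a file also changes its URL.
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.(js|css|png|jpg|webp|svg|woff2)$")
STATIC_EXTENSIONS = (".js", ".css", ".png", ".jpg", ".webp", ".svg", ".woff2")

def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value for a requested path (illustrative policy)."""
    if FINGERPRINTED.search(path):
        # The URL changes whenever the content does, so cache for a year.
        return "public, max-age=31536000, immutable"
    if path.endswith(STATIC_EXTENSIONS):
        # Unversioned assets: cache for an hour, then check for a newer copy.
        return "public, max-age=3600"
    # HTML and everything else: browsers may store it but must revalidate first.
    return "no-cache"

for path in ["/assets/app.3f9a2c1b.js", "/images/logo.png", "/blog/technical-seo-audit"]:
    print(path, "->", cache_control_for(path))
```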

Server response time is also key; a slow server can bottleneck performance. Optimizing database queries, upgrading hosting plans, or using faster server technologies can enhance response times.

Lastly, resource compression via techniques like Gzip or Brotli can shrink the size of HTML, CSS, and JavaScript files sent to the browser, accelerating the loading process.
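Text files compress well because they repeat the same tags and keywords. The sketch below uses Python's standard `gzip` module on a synthetic, highly repetitive page to show the effect; real pages compress less dramatically. In production, the web server or CDN compresses responses on the fly and labels them with a `Content-Encoding` header, and Brotli requires a third-party package rather than the standard library.

```python
import gzip

# Repetitive markup stands in for a real HTML page (product grids, nav menus, etc.).
html = ("<div class='product-card'><h2>Product</h2>"
        "<p>Description text</p></div>\n" * 200).encode("utf-8")

compressed = gzip.compress(html, compresslevel=6)
assert gzip.decompress(compressed) == html  # compression is lossless

ratio = len(compressed) / len(html)
print(f"original: {len(html)} bytes, gzip: {len(compressed)} bytes ({ratio:.1%})")
```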

Ensuring Secure Connections

Beyond enhancing page speed, another critical aspect of optimizing a website is ensuring secure connections, which is foundational for both user trust and search engine rankings.

A secure website not only protects user data but also meets the expectations of search engines like Google, which uses HTTPS as a ranking signal. Installing an SSL/TLS certificate is the first step in safeguarding your site. The certificate lets the user’s browser encrypt data exchanged with your server, keeping sensitive information confidential, and verifies your site’s identity.

Migrating from HTTP to HTTPS is crucial, as it signals to both users and search engines that your site is secure. During the migration, watch for mixed content, where an HTTPS page still loads some resources (images, scripts, stylesheets) over insecure HTTP. Mixed content undermines the security of the page: browsers may show warnings or block the insecure resources outright, which can break the page and potentially lower your SEO rankings.
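Mixed content can be found by scanning page HTML for subresources still requested over `http://`. This minimal sketch uses Python's `html.parser`; it checks `src` attributes on common media and script tags plus stylesheet links, and it ignores `srcset`, inline styles, and CSS `url()` references that a full audit would also cover. Ordinary `<a href>` links to HTTP pages are navigation rather than mixed content, so they are skipped.

```python
from html.parser import HTMLParser

SRC_TAGS = {"img", "script", "iframe", "audio", "video", "source"}

class MixedContentFinder(HTMLParser):
    """Collects (tag, url) pairs for subresources loaded over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in SRC_TAGS:
            url = attributes.get("src")
        elif tag == "link" and "stylesheet" in (attributes.get("rel") or "").lower():
            url = attributes.get("href")
        else:
            return  # e.g. <a href="http://..."> is navigation, not a subresource
        if url and url.lower().startswith("http://"):
            self.insecure.append((tag, url))

def find_mixed_content(html: str):
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure

# Hypothetical page served over HTTPS.
page = """
<link rel="stylesheet" href="http://cdn.example.com/style.css">
<img src="https://www.example.com/logo.png">
<script src="http://www.example.com/app.js"></script>
<a href="http://partner.example.org/">Partner</a>
"""
for tag, url in find_mixed_content(page):
    print(f"<{tag}> loads insecure resource: {url}")
```

Each reported URL should be switched to `https://`, or the resource moved to a host that supports HTTPS.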

Consider the following steps to ensure comprehensive security:

- SSL Certificates: Secure your site with a valid SSL certificate to encrypt data and verify authenticity.

- HTTPS Implementation: Transition all site pages and resources to HTTPS to maintain a secure environment.

- Mixed Content: Identify and update any non-secure elements to prevent security risks.

- Secure Headers: Implement HTTP headers that fortify your site’s defense against common web vulnerabilities.
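Whether a site already sends these headers can be checked from any HTTP response. The sketch below compares a response's headers, given here as a hypothetical dictionary (in practice taken from your HTTP client or browser developer tools), against a short list of widely recommended security headers. The list and values are common starting points, not a complete policy: a Content-Security-Policy must be tailored to each site, and HSTS should only be enabled once every page is served over HTTPS.

```python
# Commonly recommended security headers with typical starting values.
RECOMMENDED_HEADERS = {
    "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
    "Content-Security-Policy": "default-src 'self'",
    "X-Content-Type-Options": "nosniff",
    "Referrer-Policy": "strict-origin-when-cross-origin",
}

def missing_security_headers(response_headers: dict) -> dict:
    """Return recommended headers absent from a response (names compared case-insensitively)."""
    present = {name.lower() for name in response_headers}
    return {name: value for name, value in RECOMMENDED_HEADERS.items()
            if name.lower() not in present}

# Hypothetical response headers.
headers = {
    "content-type": "text/html; charset=utf-8",
    "strict-transport-security": "max-age=63072000",
    "X-Content-Type-Options": "nosniff",
}
for name, value in missing_security_headers(headers).items():
    print(f"missing {name}; a typical starting value is: {value}")
```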

Fixing Broken Links

A critical component of maintaining a healthy website is the identification and resolution of broken links. Broken links not only impede search engine crawlers but also degrade the user experience, leading to frustrated visitors and potential loss of business. Effective link management strategies are essential for addressing these issues and enhancing website performance.

To manage links efficiently, start with a reliable detection tool. Tools such as Google Search Console, Screaming Frog, or Ahrefs surface broken links and missing pages on a website. By systematically reviewing these reports, webmasters can pinpoint links that lead to 404 errors or outdated pages.

Once broken links are identified, implementing redirect best practices is crucial. Redirects, such as 301 redirects, seamlessly guide users and search engines from a broken link to a functional URL, preserving the user experience and retaining search engine rankings. However, it is vital to ensure that redirects are strategically planned to avoid creating redirect chains or loops, which could further complicate navigation.
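Redirect chains and loops are easy to detect once redirects are exported as a source-to-target map. This minimal sketch assumes a hypothetical map of paths; it follows each redirect hop by hop and reports whether a URL resolves in a single hop, passes through a chain, or loops back on itself.

```python
def trace_redirects(url, redirects, max_hops=10):
    """Follow a {source: target} redirect map starting at url.

    Returns (hops, verdict), where hops lists every URL visited in order.
    """
    hops = [url]
    while hops[-1] in redirects:
        target = redirects[hops[-1]]
        if target in hops:
            return hops + [target], "loop"
        hops.append(target)
        if len(hops) - 1 > max_hops:
            return hops, "too many hops"
    return hops, "chain" if len(hops) > 2 else "ok"

# Hypothetical redirect map, e.g. exported from a CMS or server config.
REDIRECTS = {
    "/old-page": "/new-page",
    "/2019-pricing": "/2021-pricing",
    "/2021-pricing": "/pricing",
    "/a": "/b",
    "/b": "/a",
}

for start in ["/old-page", "/2019-pricing", "/a"]:
    hops, verdict = trace_redirects(start, REDIRECTS)
    print(verdict, " -> ".join(hops))
```

For the chain, the fix is to point `/2019-pricing` straight at `/pricing`; for the loop, one of the two rules has to go.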

Ongoing monitoring is indispensable for preventing future link problems. Regular audits, for example quarterly, help track the health of a website’s link structure.

Automation tools can assist in setting up alerts for any newly detected broken links, enabling prompt action.

Optimizing XML Sitemaps

When it comes to enhancing search engine visibility and indexing efficiency, optimizing XML sitemaps is paramount. A well-structured XML sitemap acts as a roadmap, guiding search engines to the crucial parts of a website. An optimal XML structure ensures that each URL is accurately represented, enhancing the likelihood of proper indexing.

Here are critical steps to optimizing XML sitemaps:

- Correct XML Structure: Ensure that the XML sitemap follows the sitemap protocol’s syntax and structure. Each ‘<url>’ entry must contain a ‘<loc>’ element with the page’s full URL; ‘<lastmod>’, ‘<changefreq>’, and ‘<priority>’ are optional. Google uses ‘<lastmod>’ when it is consistently accurate but ignores ‘<changefreq>’ and ‘<priority>’, so an accurate last-modified date is the most useful addition.

- Sitemap Submission: After creating the XML sitemap, submit it through Google Search Console and Bing Webmaster Tools, and reference it with a ‘Sitemap:’ line in your robots.txt file. This tells search engines where to find the sitemap, promoting more efficient crawling and indexing.

- Priority Settings: The ‘<priority>’ tag lets you mark the relative importance of pages within your site, for example giving the homepage or key landing pages higher values than less crucial pages. It does not affect ranking, and Google ignores it entirely, so treat it as, at best, a weak crawling hint for other search engines.

- Image Inclusion: If the website is image-heavy, consider including image data within the XML sitemap. This step aids search engines in indexing images, thus enhancing visibility on image search results.

Regular updates to the XML sitemap are crucial as websites evolve. By consistently maintaining and modifying the sitemap, one ensures that no new or updated content remains undiscovered, ultimately boosting the site’s SEO performance.
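Generating the sitemap from your page list, rather than editing it by hand, makes regular updates routine. This minimal sketch uses Python's standard `xml.etree.ElementTree` with hypothetical URLs and dates: it writes the required `<loc>` for every page and adds `<lastmod>` only when a real modification date is known. It omits `<changefreq>` and `<priority>`, which Google has said it ignores. Special characters such as `&` in URLs are escaped automatically.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (absolute_url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # the only required child element
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C Datetime, e.g. 2024-05-01
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages and dates for illustration.
xml_text = build_sitemap([
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/services/technical-seo", "2024-04-18"),
    ("https://www.example.com/contact", None),
])
print(xml_text)
```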

Reviewing Indexing Status

Optimizing XML sitemaps plays a significant role in guiding search engines through a website’s architecture, yet it is equally important to monitor how effectively these efforts translate into successful indexing.

Reviewing indexing status is a critical step in ensuring a website’s visibility and accessibility on search engine results pages. Utilizing indexing tools such as Google Search Console or Bing Webmaster Tools can provide insights into which pages have been indexed and highlight potential indexing challenges.

To develop effective indexing strategies, it is essential to regularly evaluate the indexing metrics provided by these tools. Metrics such as the total number of indexed pages, crawl errors, and coverage issues offer a snapshot of a site’s indexing health. Through this examination, webmasters can identify discrepancies between submitted and indexed pages, allowing them to refine their approach.

Indexing challenges often arise from technical issues such as server errors, robots.txt restrictions, or noindex directives that inadvertently keep critical pages out of the index. Resolving these issues ensures that all intended pages are discoverable by search engines.

Regular audits of a site’s robots.txt file and meta tags can prevent such obstacles. Other indexing best practices include maintaining a clean, up-to-date sitemap and building internal links that create efficient crawl paths.
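A stray noindex can hide in two places: a robots meta tag in the HTML or an `X-Robots-Tag` HTTP response header. The sketch below checks both using Python's `html.parser`; the function name is hypothetical, and it does not handle user-agent-specific header values such as `googlebot: noindex`. Keep in mind that a crawler can only see these directives on pages that robots.txt allows it to fetch.

```python
from html.parser import HTMLParser

class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))

def blocks_indexing(html: str, headers: dict) -> bool:
    """True if the page's meta tags or X-Robots-Tag header say noindex (or none)."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    header = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    directives = finder.directives | {d.strip().lower() for d in header.split(",")}
    # "none" is shorthand for "noindex, nofollow".
    return bool(directives & {"noindex", "none"})

print(blocks_indexing('<meta name="robots" content="noindex, follow">', {}))
```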

Addressing Duplicate Content

Duplicate content’s impact on SEO is a critical issue that demands meticulous attention from webmasters. When identical or substantially similar content appears across multiple URLs, search engines can struggle to determine which version is most relevant, potentially diluting page rankings. To address this, employing effective duplicate content strategies becomes imperative.

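- Canonical Tag Implementation: One of the most efficient ways to tackle duplicate content is the canonical tag. By specifying a preferred URL for content that exists in multiple places, webmasters tell search engines which version is the primary source, helping consolidate link equity onto that URL. Search engines treat the canonical tag as a strong hint rather than a binding directive, so internal links and sitemaps should point at the canonical URL too.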

- Content Consolidation Techniques: Where several pages cover nearly the same material, such as similar product descriptions or overlapping articles, consolidating them into a single comprehensive page (and 301-redirecting the old URLs to it) can be beneficial. This reduces redundancy and gives users a more valuable resource, improving engagement and SEO performance.

- Automated Content Detection: Utilizing automated tools to detect duplicate content is crucial. These tools can swiftly identify content issues across large websites, allowing for prompt corrective action. By regularly scanning for duplicates, webmasters can maintain a clean, optimized site structure.

- User Generated Content Management: Managing user-generated content (UGC) is another layer of complexity. Duplicate issues often arise from comments or reviews. Implementing moderation strategies and using noindex tags on pages with excessive UGC can help prevent these issues from affecting SEO.
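At its simplest, automated duplicate detection compares fingerprints of each page's main content. This minimal sketch assumes hypothetical crawl data that has already been reduced to main content text (no navigation or footer): it hashes normalized text and groups URLs whose content is identical. It only catches exact duplicates; near-duplicates need similarity techniques such as shingling or SimHash.

```python
import hashlib
import re
from collections import defaultdict

def content_fingerprint(text: str) -> str:
    """SHA-256 of the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def find_exact_duplicates(pages: dict) -> list:
    """pages maps URL -> main content text; returns groups of URLs with identical content."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]

# Hypothetical crawl output: a URL parameter creates a duplicate of the product page.
pages = {
    "https://www.example.com/shoes": "Red running shoe. Lightweight mesh upper.",
    "https://www.example.com/shoes?color=red": "Red running shoe.\n  Lightweight mesh upper.",
    "https://www.example.com/boots": "Leather hiking boot.",
}
print(find_exact_duplicates(pages))
```

Each reported group is a candidate for a canonical tag, for example `<link rel="canonical" href="https://www.example.com/shoes">` on the parameterized URL.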

Final Thoughts

A technical SEO audit is essential for maintaining and improving your website’s visibility and performance in search engine results. By addressing key areas such as crawlability, site architecture, page speed, mobile optimization, and security, you can significantly enhance user experience and ensure search engines can effectively index your site. Regular audits, fixing broken links, optimizing XML sitemaps, and managing duplicate content will help maintain a healthy, search-friendly website. Staying proactive with your technical SEO efforts ensures that your site stays compliant with the latest SEO standards, providing a solid foundation for long-term success.

If you’re ready to take your website’s performance to the next level with a comprehensive technical SEO audit, connect with Syville Gacutan, an experienced SEO Specialist in the Philippines. Syville can help you identify and resolve technical issues, optimize your site for search engines, and ensure it remains competitive. Don’t leave your SEO to chance—get expert support today!