2023 Technical SEO Fundamentals: Essential Checklist

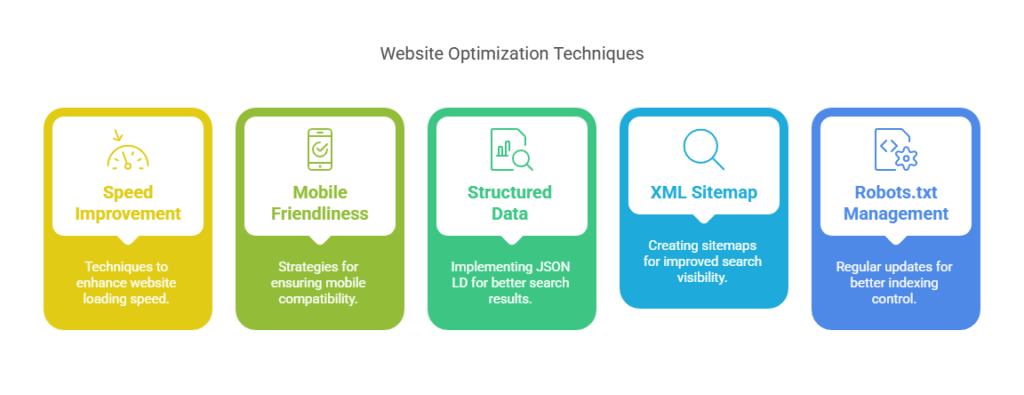

To optimize your website in 2023, focus on several key technical SEO fundamentals. Prioritize site speed by compressing images and implementing browser caching. Ensure mobile-friendliness through responsive design and proper viewport settings. Implement structured data using JSON-LD to enhance search visibility and qualify for rich snippets. Regularly update XML sitemaps and fine-tune robots.txt files for efficient search engine crawling. Use HTTPS to secure your site and support search rankings. Address crawl errors with effective redirects and maintain a robust internal linking strategy. Understanding and applying the fundamentals in this checklist will improve search engine performance and user engagement on your site.

Key Takeaways

- Speed up your website with Gzip compression, browser caching, faster server response times, and image compression.

- Make sure your website is mobile-friendly by using responsive design, appropriate viewport settings, and sizable touch targets to encourage user interaction.

- Use the JSON-LD format to implement structured data for rich snippets and improved search visibility.

- For better content visibility and effective search engine crawling, create and maintain an XML sitemap.

- To control search engine access and improve indexing, update and validate robots.txt on a regular basis.

Site Speed Optimization

How important is site speed to your website’s functionality and user experience? Very. A sluggish website leads to higher bounce rates and lower user satisfaction, because visitors expect fast access to information. Site performance optimization is therefore not a luxury but a requirement for staying competitive and improving search engine results.

Image optimization is one of the most effective ways to increase site speed. You can cut load times substantially by reducing image file sizes without a visible loss of quality. Tools like ImageOptim or TinyPNG streamline this process, which results in quicker content delivery. Reducing server response time is also crucial. This can be accomplished by choosing a reliable hosting provider and tuning your server configuration to process requests more quickly.

Using browser caching is another essential element. The browser doesn’t have to reload the full page when static files are stored locally on users’ devices, making subsequent page views faster. By using appropriate caching headers, you can make sure that your website is ready for quick access.
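As a rough illustration of what "appropriate caching headers" can look like, the sketch below picks a `Cache-Control` value based on file type. The function name, the extension list, and the one-year lifetime are assumptions made for this example rather than recommendations from the article.

```python
from pathlib import PurePosixPath

# Hypothetical policy: these values are illustrative only.
# Tune them to how often your assets actually change.
ONE_YEAR = 31_536_000  # seconds
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}


def cache_control_for(path: str) -> str:
    """Return a Cache-Control header value for the given URL path."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_EXTENSIONS:
        # Long-lived caching suits static files whose names change on update.
        return f"public, max-age={ONE_YEAR}, immutable"
    # HTML and everything else: the browser may store it but must revalidate.
    return "no-cache"


print(cache_control_for("/assets/app.3f9a1c.js"))  # public, max-age=31536000, immutable
print(cache_control_for("/blog/technical-seo/"))   # no-cache
```

Long lifetimes are only safe when file names change with their content (for example, a hash in the name); otherwise returning visitors may keep seeing stale files.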

Compression methods, such as Gzip, further enhance performance by reducing the size of your site’s files. This compression ensures that less data is transferred between server and client, accelerating page load times. Combined with a content delivery network (CDN), which distributes content across various locations globally, these strategies ensure that your website is consistently fast, regardless of user location.
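To get a feel for how much Gzip can save, the following sketch compresses a block of repetitive markup with Python's standard `gzip` module. The sample markup is made up for this example; real pages will compress by different amounts, and in production the web server or CDN normally applies the compression for you.

```python
import gzip

# Repetitive markup stands in for a real HTML page; the content is made up.
html = ('<li class="item"><a href="/page">Example link</a></li>\n' * 200).encode("utf-8")

compressed = gzip.compress(html)

print(f"original:   {len(html)} bytes")
print(f"compressed: {len(compressed)} bytes")

# Decompressing returns the exact original bytes, so nothing is lost.
assert gzip.decompress(compressed) == html
```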

Mobile-Friendliness

In the realm of digital connectivity, mobile-friendliness has emerged as a pivotal factor in a website’s overall success. As mobile devices become the primary means for internet access, ensuring that websites provide an optimal experience for mobile users is essential. This begins with implementing a responsive design, which allows a website to adapt seamlessly to various screen sizes and orientations, thus enhancing mobile usability.

Responsive design is not just about appearance; it directly impacts user engagement and conversion rates. Websites must be easy to navigate on smaller screens, and this is where touch targets become crucial. Touch targets should be large enough to be easily tapped without causing frustration, ensuring that users can interact with the website efficiently.

Viewport settings are another critical aspect of mobile-friendliness. By specifying the viewport settings, developers ensure that a site scales correctly on different devices, maintaining usability and readability. Proper viewport configuration prevents horizontal scrolling and content overflow, issues that can severely impair the user experience on mobile devices.
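One way to audit viewport settings is to check whether a page declares a viewport meta tag with `width=device-width`. The sketch below does this with Python's built-in HTML parser; the class and function names are hypothetical, and a real audit would also look at rendered layout rather than markup alone.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of a <meta name="viewport"> tag, if present."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """Return True if the page sets the viewport width to the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()


page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
print(has_responsive_viewport(page))                                 # True
print(has_responsive_viewport("<head><title>Old page</title></head>"))  # False
```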

Furthermore, Google’s mobile-first indexing reinforces the importance of mobile-friendliness. With mobile-first indexing, Google predominantly uses the mobile version of the content for indexing and ranking. Therefore, a site that is not optimized for mobile usage may suffer in search engine rankings, affecting its visibility and traffic.

Structured Data Implementation

Structured data implementation plays a crucial role in enhancing a website’s search visibility by providing search engines with explicit information about a page’s content. By using structured data types, webmasters can ensure that their pages are better understood by search engines, potentially leading to the generation of rich snippets in search results. These rich snippets, which can include elements such as ratings, pricing, and availability, not only improve the visual appeal of a search result but also increase the likelihood of user engagement.

One of the most popular formats for structured data is JSON-LD, which is preferred for its ease of use and compatibility with various content management systems. Because JSON-LD lives in its own script element, it can be added without disrupting the existing HTML structure of a webpage.
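A minimal sketch of generating such markup is shown here, assuming an `Article` page. The publication date, author name, and the `json_ld_script` helper are placeholders invented for this example, so substitute your page's real values.

```python
import json

# Hypothetical article metadata; replace with your page's real values.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "2023 Technical SEO Fundamentals: Essential Checklist",
    "datePublished": "2023-01-15",
    "author": {"@type": "Person", "name": "Example Author"},
}


def json_ld_script(data: dict) -> str:
    """Wrap a dictionary in a JSON-LD script element for the page head."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so a string value can never close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(json_ld_script(article))
```

The resulting element can be placed in the page's head or body without touching the visible markup.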

Below is an overview of key aspects of structured data implementation:

| Aspect | Details |
| --- | --- |
| Structured Data Types | Define different entities like products, articles, and events. |
| Rich Snippets | Enhanced search results showing additional information like reviews. |
| JSON-LD Format | A lightweight, easy-to-implement format for structured data. |
| Schema Validation | A process to ensure your data markup is accurate and error-free. |

Schema validation is a critical step in structured data implementation. Tools such as Google’s Rich Results Test (the successor to the retired Structured Data Testing Tool) or the Schema Markup Validator on Schema.org can identify errors and guide necessary corrections. This ensures that the data markup is correctly interpreted by search engines, maximizing the benefits of structured data.

XML Sitemap Creation

An XML sitemap serves as a roadmap for search engines, allowing them to efficiently discover and index a website’s pages. By providing a clear structure, XML sitemaps enhance the visibility of a site’s content, ensuring that even pages buried deep within the site hierarchy receive appropriate attention. The XML sitemap benefits are extensive, as they help in optimizing crawl efficiency and improving the overall SEO performance of a website.

Creating an XML sitemap starts with choosing a sitemap format, with XML being the most widely accepted by search engines. However, formats like RSS and Atom can also be beneficial in specific scenarios. Tools such as online XML sitemap generators or Screaming Frog can simplify the creation process, offering automated solutions to generate comprehensive sitemaps tailored to a site’s unique structure.

Once generated, submitting the XML sitemap is crucial. The sitemap submission process typically involves uploading the sitemap file to the website’s root directory and then informing search engines through platforms like Google Search Console or Bing Webmaster Tools. This step ensures that search engines are aware of the sitemap, enabling them to crawl the website more effectively.

Maintaining an up-to-date XML sitemap is equally important. Regularly updating the sitemap to reflect changes in the site’s structure, such as new pages, updated content, or removed pages, is essential for sustaining optimal search engine indexing. Following sitemap maintenance tips, such as scheduling periodic reviews and employing automated tools, can streamline this process, ensuring that the sitemap remains a reliable resource for search engines.
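For sites that want to automate sitemap updates, a sitemap can also be generated from a list of pages. The sketch below uses Python's standard XML library; the URLs, dates, and the `build_sitemap` name are placeholders for this example, and it covers only the `loc` and `lastmod` fields.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; in practice these would come from your CMS or database.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2023-03-01"),
    ("https://www.example.com/blog/technical-seo-checklist", "2023-03-10"),
])
print(sitemap_xml)
```

Running a script like this on a schedule, or whenever content is published, is one way to keep the sitemap in step with the site.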

Robots.txt Configuration

A robots.txt file lets webmasters manage how search engine crawlers interact with their site, keeping crawlers focused on the content that should be indexed. Proper robots.txt configuration is key to maintaining an efficient website presence.

- Robots.txt Best Practices: Ensure that your robots.txt file is up-to-date and accurately reflects your site’s structure. It should be located in the root directory of your website and must be accessible to search engines. Regular audits can help identify any misconfigurations that may hinder search engine visibility.

- Disallow Directives: Use disallow directives judiciously to keep search engines out of irrelevant or private areas of your site. For example, disallowing the ‘/admin’ directory can prevent search engines from crawling administrative pages. Keep in mind that robots.txt is publicly readable and only advisory, so it should not be the sole protection for sensitive content. Also be cautious, as excessive use of disallows can inadvertently block important content.

- User Agent Specifications: Tailor your robots.txt file to accommodate different search engine crawlers by specifying user agents. This allows you to customize instructions for each search engine and control how each one crawls your content. For instance, you might enable full access for Googlebot while restricting Bingbot’s access to certain sections.

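- Crawl Delay Settings: Implementing crawl delay settings can help manage server load by controlling how often a crawler accesses your site. This is particularly useful for websites with limited bandwidth or those experiencing performance issues due to high crawl rates. Support varies by crawler, however: Googlebot ignores the Crawl-delay directive, so check each search engine’s documentation before relying on it.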

Utilizing testing tools to validate your robots.txt file is essential. These tools can simulate how search engines interpret your directives, allowing you to ensure that your configuration aligns with intended goals and preserves site integrity.
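As one example of such a test, Python's standard `urllib.robotparser` module can evaluate directives locally before you publish them. The robots.txt content and URLs below are hypothetical, and individual search engines may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin
Crawl-delay: 5

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("*", "https://www.example.com/admin/login"))  # False
print(parser.can_fetch("*", "https://www.example.com/blog/"))        # True
print(parser.crawl_delay("*"))                                       # 5
print(parser.site_maps())
```

A check like this fits well in a deployment script, so an accidental `Disallow: /` is caught before it reaches production.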

HTTPS and Security

Securing your website with HTTPS is a fundamental aspect of technical SEO that complements the strategic management of search engine crawlers. By implementing HTTPS, which stands for Hypertext Transfer Protocol Secure, you ensure that data transferred between your website and its users is encrypted. This is achieved through SSL/TLS certificates, which authenticate the identity of your website and enable secure connections. These certificates are pivotal in establishing a secure environment for online interactions.

Data encryption is at the heart of HTTPS, safeguarding sensitive information from being intercepted by malicious parties. This not only protects your users but also enhances your website’s credibility. The HTTPS benefits extend beyond security; they are instrumental in building trust. When users see the padlock icon in their browser’s address bar, it serves as a trust signal, reassuring them of the site’s legitimacy and safety. This trust can translate into improved user engagement and retention, which are crucial for SEO performance.

Additionally, search engines like Google have recognized the importance of secure connections, considering HTTPS as a ranking factor in their algorithms. This means that websites secured with HTTPS may have a competitive edge in search engine results pages (SERPs), further justifying the investment in SSL certificates. Ultimately, transitioning to HTTPS is an indispensable move for any website committed to safeguarding user data and optimizing its SEO performance, reinforcing both user trust and search visibility.

Canonicalization Strategy

A well-executed canonicalization strategy is crucial for managing duplicate content issues and ensuring the correct version of a webpage is prioritized by search engines. By leveraging canonical tags, website owners can guide search engine indexing effectively, thus enhancing their site’s SEO performance. Implementing a sound canonicalization strategy involves several key steps that align with SEO best practices, thereby optimizing the URL structure and minimizing the risks associated with duplicate content.

- Identify Duplicate Content: Begin by conducting a thorough audit of your website to identify duplicate content. This includes identical or highly similar pages, such as product pages with various sorting options or URLs with tracking parameters.

- Apply Canonical Tags: Once duplicate content is identified, apply canonical tags to indicate the preferred version of a webpage. This informs search engines about which URLs to prioritize in the search engine indexing process, ensuring that link equity is directed to the correct page.

- Ensure Consistent URL Structure: A consistent URL structure is imperative. Ensure that your canonical tags reflect the primary URL you want indexed. Avoid using dynamic parameters in canonical URLs, as they can complicate search engine understanding.

- Monitor and Adjust Strategy: Regularly monitor your website’s performance and adjust your canonicalization strategy as needed. Use tools such as Google Search Console to track how well your canonical tags are being respected by search engines, and adjust accordingly if discrepancies are found.
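The steps above can be sketched in code as a function that maps URL variants to one preferred URL and emits the matching canonical tag. The list of ignored parameters, the choice to force HTTPS and a lowercase host, and both function names are assumptions for this example; your own rules depend on which parameters actually change page content.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that do not change what the page shows.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sort", "sessionid"}


def canonical_url(url: str) -> str:
    """Normalize a URL variant to the preferred, parameter-free form."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    return urlunsplit((
        "https",                # assumption: the HTTPS version is preferred
        parts.netloc.lower(),   # host names are case-insensitive
        parts.path or "/",
        urlencode(kept),
        "",                     # drop the fragment; it is not sent to the server
    ))


def canonical_tag(url: str) -> str:
    """Return the <link rel="canonical"> element for a URL variant."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


print(canonical_tag("http://Example.com/shoes?sort=price&utm_source=news&color=red"))
# <link rel="canonical" href="https://example.com/shoes?color=red">
```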

Crawl Error Resolution

Effective crawl error resolution is a critical component of technical SEO, vital for ensuring that search engines can access and index your website’s content efficiently. The process begins with a thorough understanding of how search engines allocate a crawl budget, which refers to the number of pages a search engine will crawl during a given timeframe. Optimizing this budget is essential to ensure that important pages are indexed promptly, while avoiding unnecessary server strain.

Error tracking is the first step in resolving crawl errors. Utilizing tools like Google Search Console can help identify issues such as 404 errors, server errors, and DNS problems. Regular monitoring enables you to swiftly address these issues before they negatively impact your site’s search performance. Proper server response is crucial; ensure that your server is configured to handle requests efficiently, thus preventing downtime and slow load times that could deter crawlers.

URL inspection tools offer insights into how search engines perceive your URLs. This allows you to verify if pages are being indexed properly and identify any discrepancies that may hinder their visibility. In addition, redirect management is a necessary aspect of this process. Use a 301 redirect when a page has moved permanently and a 302 redirect when the move is temporary, so that both users and search engines are directed to the correct pages and do not hit dead ends that lead to crawl errors.
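Redirects that point at other redirects waste crawl budget, so it can help to audit a redirect map for chains and loops. The sketch below works on an in-memory dictionary; the paths, the `resolve_redirect` name, and the ten-hop limit are hypothetical, and a real audit would read the map from server configuration or crawl data.

```python
def resolve_redirect(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow redirects from start and return every URL visited, in order."""
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError(f"redirect loop: {' -> '.join(chain + [target])}")
        chain.append(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} hops from {start}")
    return chain


# Hypothetical redirect map: source path -> destination path.
REDIRECTS = {
    "/old-page": "/interim-page",
    "/interim-page": "/new-page",
}

chain = resolve_redirect("/old-page", REDIRECTS)
print(" -> ".join(chain))  # /old-page -> /interim-page -> /new-page
if len(chain) > 2:
    print(f"Chain found: point {chain[0]} straight at {chain[-1]}")
```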

Internal Linking Strategy

Addressing crawl errors paves the way for an effective internal linking strategy, which plays a pivotal role in enhancing a website’s SEO performance. Internal links serve as a roadmap for search engines and users, guiding them through your content and establishing the authority of your pages. To optimize your internal linking strategy, consider the following essential elements:

- Anchor Text: Utilize descriptive and relevant anchor text to convey the content of the linked page. This not only aids search engines in understanding the context but also improves user engagement by attracting clicks from interested visitors.

- Link Hierarchy: Develop a clear link hierarchy that highlights the most important pages on your site. Prioritize links to cornerstone content, ensuring these pages receive the most link equity and visibility. This hierarchical structure helps search engines prioritize and index vital content.

- Contextual Relevance: Ensure links are contextually relevant within the content they are placed. This relevance enhances the user experience and reinforces the semantic connections between topics, providing a logical path for both users and crawlers to follow.

- Regular Link Audits: Conduct regular link audits to identify broken links, redirect chains, and orphaned pages. By resolving these issues, you maintain the integrity of your internal linking structure and prevent user frustration.

Implementing these strategies not only bolsters SEO performance but also enhances user experience. An effective internal linking strategy seamlessly integrates anchor text, link hierarchy, and contextual relevance, forming a cohesive web of interconnected content. Regular link audits ensure this network remains robust and efficient, ultimately leading to improved page rankings and user satisfaction.
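Part of a link audit can be automated. As a small illustration, the sketch below finds orphaned pages, meaning pages that cannot be reached by following internal links from the home page. The site structure and the `find_orphans` name are invented for this example; in practice the link data would come from a crawler export.

```python
from collections import deque


def find_orphans(links: dict[str, list[str]], home: str) -> set[str]:
    """Return pages that cannot be reached by following links from home."""
    seen = {home}
    queue = deque([home])
    while queue:  # breadth-first walk over the internal link graph
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return all_pages - seen


# Hypothetical site: each page maps to the internal links it contains.
SITE = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/seo-checklist"],
    "/services": ["/"],
    "/blog/seo-checklist": [],
    "/old-landing-page": ["/services"],  # links out, but nothing links to it
}

print(find_orphans(SITE, "/"))  # {'/old-landing-page'}
```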

Schema Markup Usage

Schema markup, a crucial component of technical SEO, enhances the way search engines interpret and display website content. By incorporating structured data through schema types, businesses can significantly improve their visibility in search results. This not only aids search engines in understanding the content context but also facilitates the generation of rich snippets. Rich snippets, such as reviews, ratings, or product details, can increase click-through rates by making search results more informative and appealing to users.

Various schema types are available, each catering to specific content categories such as articles, events, products, and local businesses. Selecting the right schema type is essential for maximizing the SEO benefits of structured data. Proper implementation ensures that search engines can extract the intended information accurately, thereby enhancing the website’s overall visibility and credibility.

However, the process of implementing schema markup is not without challenges. One of the primary implementation challenges involves ensuring that the markup is both syntactically correct and contextually relevant to the content. Errors in markup can lead to missed opportunities in search visibility, and misleading markup can even result in a manual action from Google. Therefore, regular markup testing using tools such as Google’s Rich Results Test or the Schema Markup Validator is vital to validate the accuracy and effectiveness of the applied schema.
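Before reaching for an online validator, a quick local check can catch the most basic syntax problems. The sketch below is a rough pre-check under stated assumptions: it handles only a single top-level JSON-LD object, the `check_json_ld` name is hypothetical, and it does not replace a full validator, which also checks required properties for each schema type.

```python
import json


def check_json_ld(raw: str) -> list[str]:
    """Return a list of problems found in a JSON-LD string (empty if none)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as error:
        return [f"invalid JSON: {error.msg} at line {error.lineno}"]
    if not isinstance(data, dict):
        return ["top-level value should be a JSON object"]
    problems = []
    if "schema.org" not in str(data.get("@context", "")):
        problems.append("@context does not reference schema.org")
    if "@type" not in data:
        problems.append("@type is missing")
    return problems


print(check_json_ld('{"@context": "https://schema.org", "@type": "Product", "name": "Widget"}'))  # []
print(check_json_ld('{"@context": "https://schema.org"}'))  # ['@type is missing']
```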

The SEO benefits of schema markup are undeniable, yet its successful deployment requires a strategic approach. Understanding the nuances of different schema types and diligently testing markup for errors can lead to significant improvements in search performance. Ultimately, schema markup serves as a powerful tool in the technical SEO arsenal, driving enhanced visibility and engagement in search results.

Final Thoughts

Mastering technical SEO fundamentals in 2023 is key to building a solid foundation for your website’s success. By optimizing site speed, ensuring mobile-friendliness, and implementing structured data, you can significantly enhance both user experience and search engine visibility. Regular updates to XML sitemaps, strategic management of crawl budgets, and resolving crawl errors help maintain a smooth search engine indexing process. Additionally, robust internal linking, HTTPS security, and the use of schema markup improve SEO performance and user trust. By consistently applying these best practices, you can ensure long-term growth and a competitive edge in search rankings.

If you’re ready to elevate your website’s technical SEO performance, contact Syville Gacutan, an experienced SEO Specialist in the Philippines. Syville can help you optimize your site, boost search visibility, and achieve your digital marketing goals. Connect today for expert SEO solutions!